Moderation Isn’t an Afterthought. It’s Your Platform’s Immune System

When the Lights Went Out on Reddit

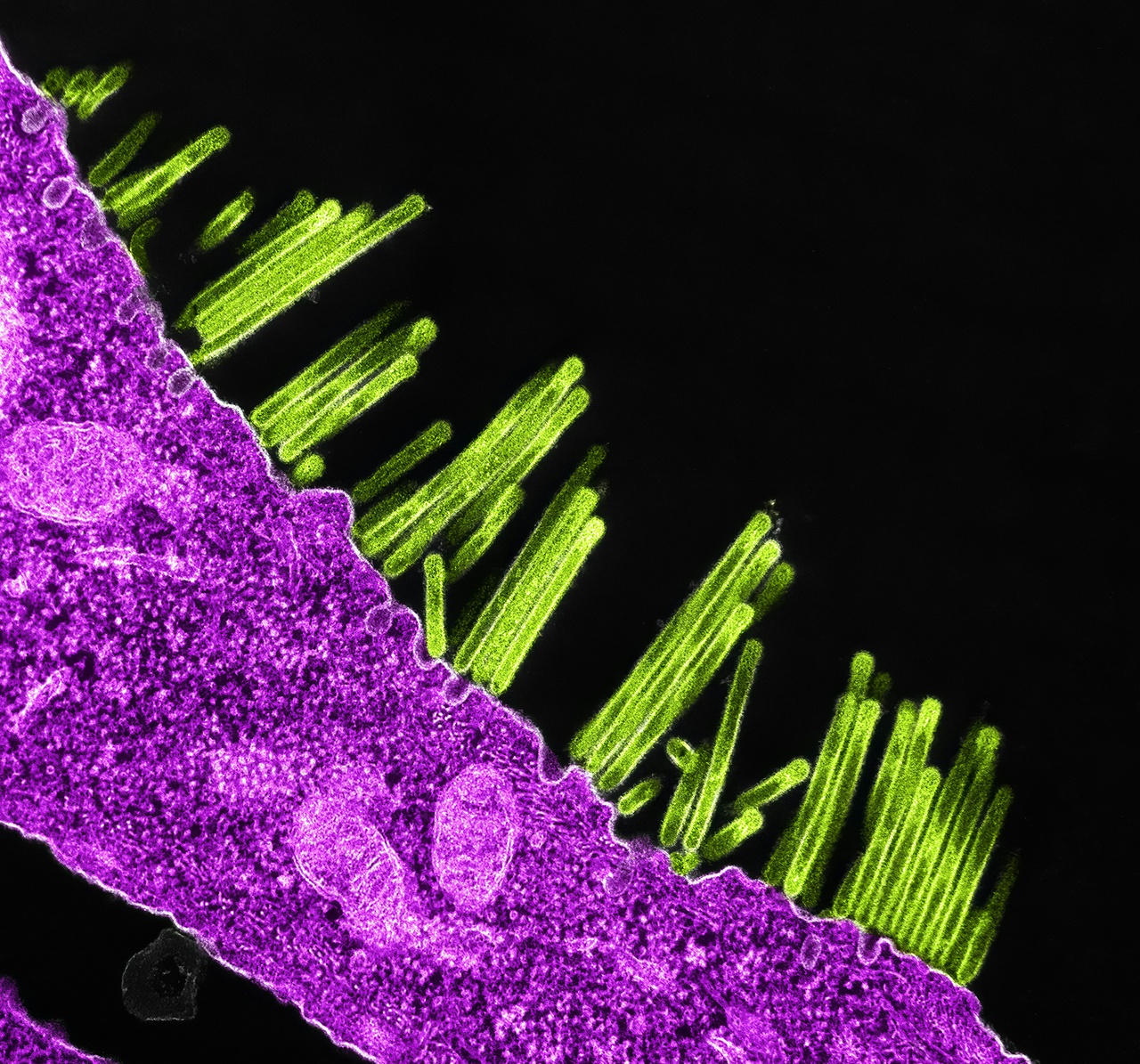

Photo: niaid

In summer 2023, large swaths of Reddit went dark. Thousands of subreddits flipped to private in one of the platform’s largest-ever protests. The spark? API pricing changes that disrupted the third-party tools moderators relied on to keep communities healthy.

It wasn’t just about dollars. It was a reminder that communities collapse when trust and safety are treated as expendable.

And Reddit isn’t an outlier.

Twitter/X showed how quickly baseline safety unravels when trust and safety staff are gutted.

Meta has faced repeated struggles moderating global elections, not simply because of scale, but because centralized systems that under-resource local expertise can’t keep up with fast-moving, language-specific, and coordinated threats.

Crises like these aren’t accidents. They’re design choices.

The alternative is to see moderation not as janitorial cleanup, but as a resilient immune system: layered, adaptive, and principled.

What Are “Exit Signs”?

Each phase of this roadmap ends with “exit signs”: clear, observable indicators that a platform is ready to evolve to the next stage. They’re not lofty goals, but pragmatic thresholds. The question is always the same: is the system stable enough to scale responsibly?

Phase 1: Safe by Default

Baseline safety is your platform’s “skin barrier.” It’s not glamorous, but without it, nothing else functions.

What it looks like

- A platform-managed moderation service covering four unavoidable risks: spam, harassment, NSFW content, and deliberate disinformation.

- Automation to handle the obvious (hash-matching for CSAM, spam filtering), with human moderation for edge cases.

- Defaults that build immediate trust: “You’re protected automatically.”
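One way to picture the layering described above is a small triage function: an automated hash match first, then a simple spam filter, with ambiguous cases routed to a human. This is a minimal sketch under stated assumptions, not a description of any platform's real pipeline. The names `KNOWN_BAD_HASHES`, `SPAM_TERMS`, the thresholds, and `triage` are all hypothetical, and the exact SHA-256 digest stands in for the perceptual hashing that production systems typically use.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical values for illustration only.
KNOWN_BAD_HASHES = {hashlib.sha256(b"known-bad-example").hexdigest()}
SPAM_TERMS = ("free money", "click here", "limited offer")
BLOCK_THRESHOLD = 2   # spam-term hits at or above this are removed automatically
REVIEW_THRESHOLD = 1  # hits at this level are sent to a human moderator


@dataclass
class Decision:
    action: str  # "remove", "review", or "allow"
    reason: str


def spam_hits(text: str) -> int:
    """Count how many known spam phrases appear in the text."""
    lowered = text.lower()
    return sum(term in lowered for term in SPAM_TERMS)


def triage(content: bytes) -> Decision:
    """Layered check: hash match, then spam filter, then human review."""
    digest = hashlib.sha256(content).hexdigest()
    if digest in KNOWN_BAD_HASHES:
        return Decision("remove", "hash match")

    hits = spam_hits(content.decode("utf-8", errors="ignore"))
    if hits >= BLOCK_THRESHOLD:
        return Decision("remove", "spam filter")
    if hits >= REVIEW_THRESHOLD:
        # Edge case: automation is unsure, so a person decides.
        return Decision("review", "ambiguous spam signal")
    return Decision("allow", "no signal")
```

The design point is the middle branch: automation handles only the obvious cases at either end, and everything uncertain lands in a human queue rather than being silently removed or allowed.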

Where it works: Discord demonstrates this hybrid approach well, pairing platform guardrails with community moderators in every server to balance automation with human judgment. That said, Discord faces its own challenges, such as uneven enforcement across servers, a reminder that no model is flawless.

If skipped: When Twitter/X cut back on staff, spam and abuse exploded, degrading both user trust and advertiser confidence.

Exit signs: Rates of baseline harmful content declining, most users reporting they feel safe, and moderation response times hitting clear targets.
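Because exit signs are meant to be observable thresholds, they can be written down as explicit checks. The sketch below is one hypothetical way to encode the three Phase 1 signs. The field names and the 24-hour response target are assumptions chosen for illustration, not figures from any platform.

```python
from dataclasses import dataclass

# Hypothetical target; each platform would set its own.
TARGET_RESPONSE_HOURS = 24.0


@dataclass
class Phase1Metrics:
    harmful_rate_prev: float      # share of content flagged harmful, previous period
    harmful_rate_now: float       # share of content flagged harmful, current period
    users_feeling_safe: float     # survey share between 0 and 1
    median_response_hours: float  # median time to act on a report


def exit_signs(m: Phase1Metrics) -> dict:
    """Evaluate each Phase 1 exit sign as a simple pass/fail check."""
    return {
        "harmful_content_declining": m.harmful_rate_now < m.harmful_rate_prev,
        "most_users_feel_safe": m.users_feeling_safe > 0.5,
        "response_time_on_target": m.median_response_hours <= TARGET_RESPONSE_HOURS,
    }


def ready_for_next_phase(m: Phase1Metrics) -> bool:
    """A platform moves on only when every exit sign is met."""
    return all(exit_signs(m).values())
```

Requiring every sign to pass, rather than an average, reflects the framing of exit signs as thresholds: one strong metric should not mask a failing one.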

Phase 2: Community-Led Customization

Once the basics are stable, communities need agency. Moderation should evolve into more than protection. It should become collaboration.

What it looks like

- Independent “labelers” run by trusted partners: fact-checkers, accessibility advocates, or civic groups.

- Users stacking these filters in their settings, choosing the lenses that matter most to them.

- Platforms experimenting with sustainable support for partners through contracts, grants, or microfunding.
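The idea of users stacking filters can be sketched as independent labelers that each attach labels to a post, plus per-user preferences that decide what each label does. This is an illustrative model only and does not reproduce any real platform's labeler API. The labelers, label names, `REGISTRY`, and `resolve` are all hypothetical.

```python
from typing import Callable, Dict, List, Set, Tuple

Labeler = Callable[[str], Set[str]]


def fact_check_labeler(text: str) -> Set[str]:
    """Toy stand-in for a fact-checking partner."""
    return {"disputed-claim"} if "miracle cure" in text.lower() else set()


def accessibility_labeler(text: str) -> Set[str]:
    """Toy stand-in for an accessibility advocate group."""
    return {"missing-alt-text"} if "[image]" in text.lower() else set()


# Hypothetical registry of independent labelers.
REGISTRY: Dict[str, Labeler] = {
    "fact-check": fact_check_labeler,
    "accessibility": accessibility_labeler,
}

# Higher number means a more restrictive action.
SEVERITY = {"show": 0, "warn": 1, "hide": 2}


def resolve(
    text: str, preferences: Dict[str, Dict[str, str]]
) -> Tuple[str, List[Tuple[str, str]]]:
    """Apply only the labelers a user subscribed to.

    preferences maps labeler name -> {label: action}.
    Returns the most restrictive action and the labels that were applied.
    """
    outcome = "show"
    applied: List[Tuple[str, str]] = []
    for name, actions in preferences.items():
        for label in sorted(REGISTRY[name](text)):
            applied.append((name, label))
            action = actions.get(label, "show")
            if SEVERITY[action] > SEVERITY[outcome]:
                outcome = action
    return outcome, applied
```

Returning the applied labels alongside the outcome keeps the stack transparent: a user can see which labeler caused a post to be hidden or flagged, and can adjust that one subscription.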

Where it works: Bluesky demonstrates this pluralism through its labeler system, where independent groups define moderation criteria and users layer them transparently. As with any new system, it’s still evolving, but it points toward a more participatory future.

If skipped: Reddit’s blackout is what happens when communities lack genuine power-sharing. Moderators revolted because they were being asked to carry the load without meaningful partnership.

Exit signs: Labeler adoption growing, stable governance for trusted partners, and rising satisfaction scores as customization options expand.

Phase 3: User Empowerment

The mature phase is adaptability. Platforms should equip users and communities to invent their own solutions, while still providing infrastructure and oversight.

What it looks like

- User-created moderation stacks, shared blocklists, and bespoke labelers.

- Built-in governance: appeals systems, transparency dashboards, and verification of trusted creators.

- Defaults that remain simple, while advanced tools are discoverable for communities that want them.

Where it works: In the Fediverse, Mastodon and other decentralized networks are early experiments in community-driven blocklists and moderation collectives. It’s messy and sometimes inconsistent, but generative: an evolving model of empowerment.

If skipped: Meta’s struggles to manage misinformation during elections show the brittleness of centralized-only approaches. Without empowering local actors and communities with flexible tools, global moderation will always lag behind coordinated threats.

Exit signs: User-created tools proliferating without fueling systemic abuse, independent audits confirming diversity of approaches, and appeals processes functioning transparently.

The Scale Question

Not every platform can fund a large trust and safety team on day one. That’s fine. “Safe by default” doesn’t have to mean “enterprise-scale.”

Even small startups can right-size: a part-time moderator with clear escalation protocols, lightweight misuse filters, or well-trained volunteers can still establish the baseline.

The principle is resilience at your scale, not perfection.

Legal and Ethical Anchors

Moderation only works if it’s grounded. Rights and ethics aren’t handcuffs. They’re design opportunities.

- Freedom of Expression: Label over remove wherever possible.

- Equality Rights: Audit tools for bias before they scale.

- Transparency and Accountability: Publish dashboards, create appeal pathways, and allow third-party audits.

A platform legitimizes itself not when it says it values rights, but when those rights are visible in its design.

The Point

Reddit’s blackout, Twitter’s safety collapse, Meta’s battles with misinformation – these weren’t glitches. They were warnings.

Moderation isn’t maintenance. It’s care by design.

When you treat it like an immune system that’s layered, participatory, and principled, you don’t just stop harm. You create spaces where people feel safe enough to show up, contribute, and stay.

That’s what resilience looks like in practice.

Editor’s Note: This piece was drafted in June 2025 and added here as part of my archives.